UV Mapping

As mentioned in the material, defining colors per vertex does not give a fine enough granularity of colors (unless we use many more vertices than would otherwise be necessary). For this reason, we can specify functions or images that can be sampled to get a color value at some position.

The main question is, what position is that?

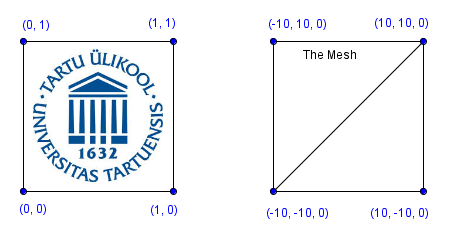

An image used as a texture has its own coordinate system, called UV coordinates. These coordinates range over $[0, 1]$ along both axes of the image: the bottom-left corner is the point $(0, 0)$ and the top-right corner is $(1, 1)$.
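To make the UV convention concrete, here is a minimal nearest-neighbour lookup sketched in Python. It assumes the image is stored as a list of rows with row 0 at the top, which is a common in-memory layout but an assumption here. Under that layout the v axis has to be flipped. `sample_nearest` and `TEXTURE` are hypothetical names, not shader or library functions. Real GPU samplers also offer filtering such as bilinear interpolation.

```python
def sample_nearest(image, u, v):
    """Return the texel of `image` at UV (u, v) using nearest-neighbour lookup.

    Assumes `image` is a list of rows with row 0 being the TOP row of the
    picture, while UV has (0, 0) at the bottom-left and (1, 1) at the top-right.
    """
    height = len(image)
    width = len(image[0])
    # Scale UV to texel space; clamp so that u = 1 or v = 1 stays inside the image.
    col = min(int(u * width), width - 1)
    row_from_bottom = min(int(v * height), height - 1)
    # Flip vertically: v grows upward, but row indices grow downward.
    row = height - 1 - row_from_bottom
    return image[row][col]


# A hypothetical 2x2 texture, written top row first.
TEXTURE = [["TL", "TR"],
           ["BL", "BR"]]

print(sample_nearest(TEXTURE, 0.0, 0.0))  # prints BL (bottom-left)
```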

The task is to create a mapping between the local vertex coordinates (which we know in the shader) and the UV coordinates. Using that mapping, we can then sample colors from the image correctly.
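For an axis-aligned rectangle, one simple choice of mapping is a linear remap of each local coordinate onto $[0, 1]$. Here $x_{\min}, x_{\max}, y_{\min}, y_{\max}$ are assumed names for the rectangle's extent in local coordinates. The formula also assumes that local $y$ grows in the same direction as $v$:

$$
u = \frac{x - x_{\min}}{x_{\max} - x_{\min}}, \qquad
v = \frac{y - y_{\min}}{y_{\max} - y_{\min}}
$$

With this mapping, the corner $(x_{\min}, y_{\min})$ gets UV $(0, 0)$ and the corner $(x_{\max}, y_{\max})$ gets UV $(1, 1)$.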

The texture image with its UV coordinates is shown on the left. A triangulated square that is 20 units wide with its local center at $(0, 0)$ (such squares were the walls in our chopper task) might look something like the image on the right. Its local coordinates run from $-10$ to $10$ along each axis.
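Here is a minimal Python sketch of per-vertex UVs for the 20-unit square, computed outside the shader. It rests on a few assumptions. The square is taken to lie in its local x-y plane, with y pointing the same way as v. The vertices follow a hypothetical six-vertex layout of two triangles. The names `local_to_uv`, `wrap_repeat` and the `repeats` parameter are illustrative only and are not part of the task code.

```python
import math

HALF = 10.0  # half of the 20-unit width; the square's local center is (0, 0)

# Hypothetical vertex list: two triangles covering the square, (x, y) in local space.
POSITIONS = [
    (-HALF, -HALF), (HALF, -HALF), (HALF, HALF),   # first triangle
    (-HALF, -HALF), (HALF, HALF), (-HALF, HALF),   # second triangle
]


def local_to_uv(x, y, half=HALF, repeats=1):
    """Map local (x, y) in [-half, half] to UV in [0, repeats] on both axes."""
    u = (x + half) / (2 * half) * repeats
    v = (y + half) / (2 * half) * repeats
    return u, v


def wrap_repeat(t):
    """Emulate a REPEAT wrap mode: keep only the fractional part of t."""
    return t - math.floor(t)


# Flat [u0, v0, u1, v1, ...] lists, the shape typically uploaded as an attribute buffer.
UVS = [c for x, y in POSITIONS for c in local_to_uv(x, y)]
UVS_TILED = [c for x, y in POSITIONS for c in local_to_uv(x, y, repeats=2)]
```

With `repeats=2`, the UVs span $[0, 2]$. This is one way to get the 2x2 repetition asked for at the end of this section. A sampler with a repeating wrap mode (for example WebGL's `gl.REPEAT`, if the task uses WebGL) keeps only the fractional part, which is what `wrap_repeat` imitates. For example, local $(5, -5)$ gets $u = 1.5$, which wraps to $0.5$, the same value as at $(-5, -5)$. Computing UVs on the CPU like this is only one option. The same formula could instead be evaluated in the vertex shader from the position attribute.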

In the task, a perspective camera looks straight down on such a square. Your job is to send the correct UV coordinates to the corresponding vertices as vertex attributes in the shader. Then pass them on to the fragment shader. They arrive there interpolated across each triangle, and you can use those values to sample from the texture image.
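The interpolation is done by the GPU, not by your code. Still, a small CPU-side sketch shows what happens to a UV attribute between the vertex and fragment shader. It blends the three vertex UVs with barycentric weights computed in the square's local plane. This matches screen-space interpolation only because every vertex is at the same depth when the camera looks straight down at a flat square. In general, GPUs use perspective-correct interpolation. The function names here are illustrative.

```python
def barycentric(p, a, b, c):
    """Barycentric weights of point p with respect to triangle (a, b, c)."""
    (px, py), (ax, ay), (bx, by), (cx, cy) = p, a, b, c
    det = (by - cy) * (ax - cx) + (cx - bx) * (ay - cy)
    w_a = ((by - cy) * (px - cx) + (cx - bx) * (py - cy)) / det
    w_b = ((cy - ay) * (px - cx) + (ax - cx) * (py - cy)) / det
    return w_a, w_b, 1.0 - w_a - w_b


def interpolate_uv(p, tri_pos, tri_uv):
    """Blend the three per-vertex UVs with the barycentric weights of p."""
    weights = barycentric(p, *tri_pos)
    u = sum(w * uv[0] for w, uv in zip(weights, tri_uv))
    v = sum(w * uv[1] for w, uv in zip(weights, tri_uv))
    return u, v


# One triangle of the 20-unit square with its per-vertex UVs.
tri_pos = [(-10.0, -10.0), (10.0, -10.0), (10.0, 10.0)]
tri_uv = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0)]
print(interpolate_uv((5.0, -5.0), tri_pos, tri_uv))  # prints (0.75, 0.25)
```

At a vertex, the weights collapse to $(1, 0, 0)$ or a permutation of it, so the fragment receives exactly that vertex's UV. Between vertices, the UV varies linearly.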

Try to render the texture repeated 2 times in both directions. The final result might look something like this: